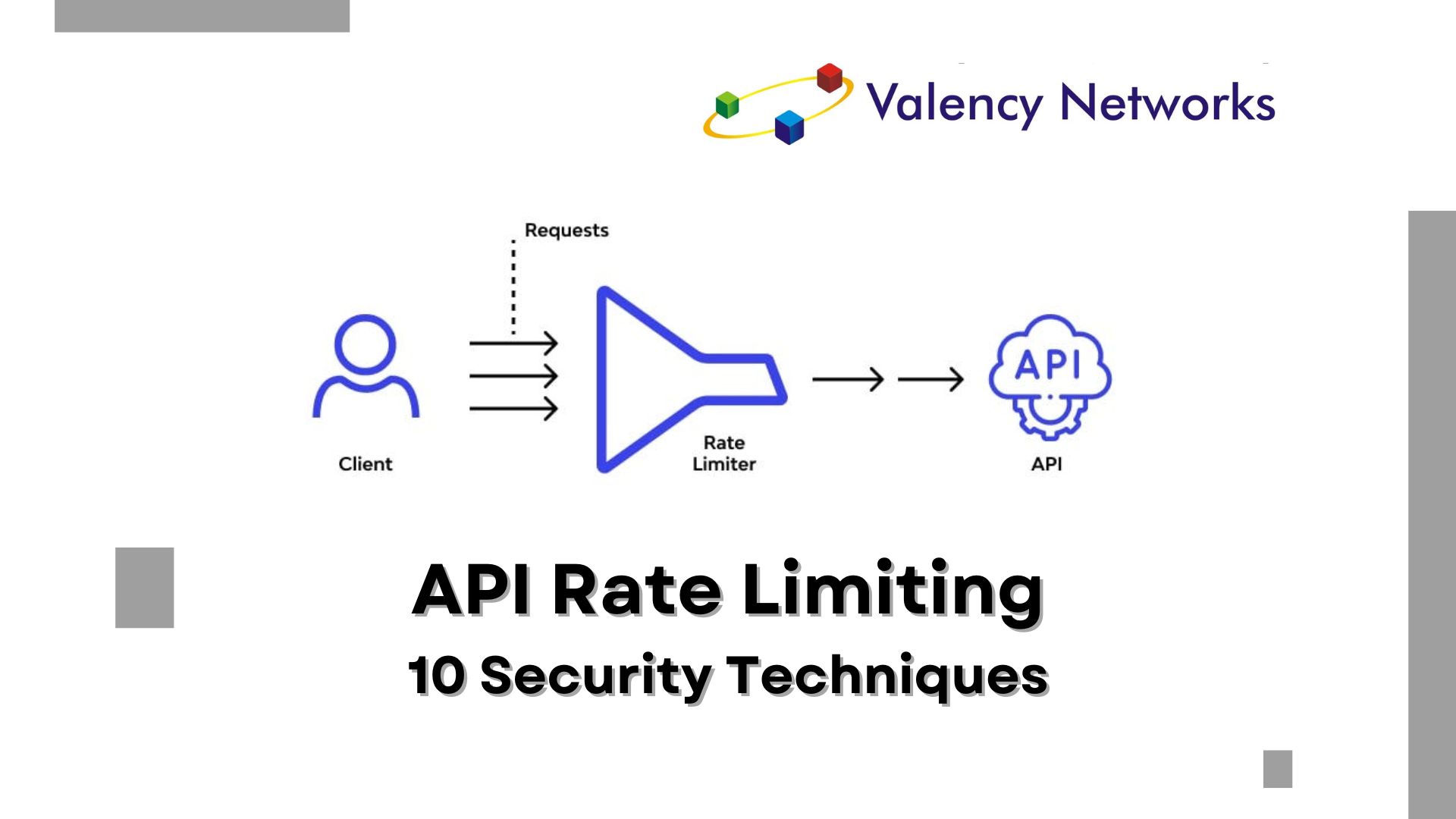

As the Chief Technology Officer at Valency Networks, I understand the critical importance of securing your REST API against misuse and abuse. Rate limiting is a fundamental part of API security: it lets you control how often clients can access your resources and keeps any single client from overwhelming your servers. In this article, I'll walk through 10 techniques for implementing rate limiting effectively so you can safeguard your REST API and maintain optimal performance.

Introduction: Ensuring Secure and Reliable API Access

Rate limiting plays a pivotal role in protecting your REST API from abuse and ensuring fair usage by legitimate users. By restricting the number of requests a client can make within a given time frame, rate limiting helps mitigate denial-of-service (DoS) attacks, prevents server overload, and improves overall system reliability. It complements authentication and authorization rather than replacing them: it limits how much a client can do, not whether it is allowed to do it.

1. Token Bucket Algorithm: Precision Control for Request Flow

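The token bucket algorithm is a tried-and-tested method for enforcing rate limits precisely and efficiently. The bucket holds up to a fixed number of tokens and is refilled at a constant rate; each request consumes a token, and a request that arrives when the bucket is empty is rejected (or delayed). This keeps the average request rate at the refill rate while still tolerating short bursts up to the bucket's capacity.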
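
A minimal single-process sketch in Python, assuming an in-memory bucket per client and a monotonic clock (a multi-server deployment would typically keep this state in a shared store instead). The class name `TokenBucket`, its parameters, and the injectable `clock` are illustrative, not taken from any particular library:

```python
import time
from typing import Callable


class TokenBucket:
    """In-memory token bucket: refills at `rate` tokens per second, up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate            # tokens added per second
        self.capacity = capacity    # maximum tokens the bucket can hold
        self.tokens = capacity      # start full so an idle client can burst
        self.clock = clock          # injectable so tests can control time
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        # Credit tokens for the time since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens and return True, or return False to reject."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

For example, `TokenBucket(rate=5, capacity=10)` admits 10 requests at once from a full bucket and then about 5 per second; you would keep one bucket per client key and answer with 429 when `allow()` returns False.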

2. Leaky Bucket Algorithm: Smooth Regulation of Request Rate

The leaky bucket algorithm is closely related to the token bucket but shapes traffic differently. Incoming requests are placed in a bucket (a bounded queue) that “leaks” at a constant rate, so requests are processed at a steady pace no matter how bursty their arrival is. If requests arrive faster than the bucket drains and it fills up, new requests overflow and are discarded. The result is a smooth, consistent output rate.
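
A minimal in-memory sketch of the queue form of the leaky bucket, in Python. It assumes a separate worker loop calls `drain()` regularly and processes whatever it returns; `LeakyBucket`, `offer()`, `drain()`, and the injectable `clock` are illustrative names, not a library API:

```python
import time
from collections import deque
from typing import Any, Callable, List


class LeakyBucket:
    """Requests wait in a bounded queue and leave it at a constant `leak_rate` per second."""

    def __init__(self, capacity: int, leak_rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity      # maximum queued requests
        self.leak_rate = leak_rate    # requests released per second
        self.queue: deque = deque()
        self.clock = clock
        self.last_leak = clock()
        self.credit = 0.0             # fractional releases earned so far

    def offer(self, request: Any) -> bool:
        """Enqueue a request; return False (discard it) if the bucket is full."""
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(request)
        return True

    def drain(self) -> List[Any]:
        """Release as many queued requests as the constant leak rate allows by now."""
        now = self.clock()
        self.credit += (now - self.last_leak) * self.leak_rate
        self.last_leak = now
        released = []
        while self.queue and self.credit >= 1.0:
            released.append(self.queue.popleft())
            self.credit -= 1.0
        if not self.queue:
            self.credit = 0.0         # an empty bucket does not bank future releases
        return released
```

Because `offer()` only checks the queue length, the worker has to call `drain()` often (for example on a short timer) for the capacity check to reflect the constant leak rate.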

3. Fixed Window Counting: Setting Thresholds for Request Rates

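Fixed window counting counts the requests each client makes within a fixed time window (e.g., each calendar minute) and compares that count against a predefined threshold. Requests exceeding the threshold are rejected or delayed until the window resets. The method is simple and cheap, but because counters reset at window boundaries, a client can send up to twice the limit in a short span that straddles two windows.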
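
A minimal in-memory sketch in Python, assuming a single process and windows aligned to the Unix epoch (so a 60-second window matches UTC calendar minutes). `FixedWindowCounter` and its parameters are illustrative names:

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowCounter:
    """Allows at most `limit` requests per client in each `window`-second window."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.time):
        self.limit = limit
        self.window = window
        self.clock = clock
        # client_id -> (window index, requests counted in that window)
        self.counts: Dict[str, Tuple[int, int]] = {}

    def allow(self, client_id: str) -> bool:
        idx = int(self.clock() // self.window)   # which window "now" falls in
        saved_idx, count = self.counts.get(client_id, (idx, 0))
        if saved_idx != idx:
            count = 0                            # a new window has started
        if count >= self.limit:
            return False                         # over the threshold: reject
        self.counts[client_id] = (idx, count + 1)
        return True
```

With `limit=100` and `window=60`, a client could send 100 requests at 12:00:59 and another 100 at 12:01:00, which is 200 requests in about one second. Old entries are never evicted in this sketch; a real deployment would expire them, for example with key TTLs in a shared store.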

4. Sliding Window Counter: Flexibility in Rate Limiting

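The sliding window counter tracks requests over a window that moves with time instead of resetting at fixed boundaries, which avoids the burst-at-the-boundary problem of fixed window counting. A common approach keeps counts for the current and previous fixed windows and weights the previous count by how much of that window still overlaps the sliding window. This smooths out boundary bursts while storing only two counters per client.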
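
A minimal in-memory sketch of the weighted two-window approximation in Python. `SlidingWindowCounter` and its parameters are illustrative; other variants, such as a sliding log that stores every request timestamp, trade more memory for exact counts:

```python
import time
from typing import Callable, Dict, Tuple


class SlidingWindowCounter:
    """Estimates requests in the last `window` seconds from two fixed-window counts."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.time):
        self.limit = limit
        self.window = window
        self.clock = clock
        # client_id -> (window index, current count, previous count)
        self.state: Dict[str, Tuple[int, int, int]] = {}

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        idx = int(now // self.window)
        saved_idx, current, previous = self.state.get(client_id, (idx, 0, 0))
        if idx == saved_idx + 1:
            previous, current = current, 0     # moved into the next window
        elif idx != saved_idx:
            previous, current = 0, 0           # idle for two or more windows
        # Fraction of the current fixed window that has already elapsed.
        elapsed_fraction = (now % self.window) / self.window
        # The previous window only counts for the part still inside the slide.
        estimate = previous * (1.0 - elapsed_fraction) + current
        if estimate >= self.limit:
            self.state[client_id] = (idx, current, previous)
            return False
        self.state[client_id] = (idx, current + 1, previous)
        return True
```

For example, with `limit=100`, `window=60`, 100 requests in the previous minute and 20 so far in the current one, at 15 seconds into the current minute the estimate is 100 × 0.75 + 20 = 95, so five more requests would be admitted at that moment before rejections begin.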

5. Token Bucket with Bursting: Handling Short-Term Surges in Traffic

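In a token bucket, bursting comes from the bucket's capacity. While a client is idle, tokens accumulate up to that capacity, so a sudden spike can be served immediately from the saved tokens while the long-term average stays capped at the refill rate. Choosing a capacity larger than the per-second refill rate lets your API absorb short-term surges in traffic without raising the sustained limit or compromising overall performance.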
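
As a rough sizing aid, assuming a token bucket with refill rate $r$ tokens per second, capacity $b$ tokens, and one token per request, the number of requests $N(t)$ it can admit over any interval of $t$ seconds is bounded by the tokens stored at the start of the interval plus those added during it:

$$
N(t) \le b + r\,t
$$

For example, with $r = 10$ and $b = 50$, a client returning from idle can send 50 requests at once, and at most $50 + 10 \times 60 = 650$ requests in any one-minute interval, while its long-run average stays at 10 requests per second.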

6. Dynamic Rate Limiting: Adaptive Control Based on User Behavior

Dynamic rate limiting sets each client's limit from attributes such as whether it is authenticated, its recent usage patterns, or its subscription plan. For example, anonymous callers might receive a low limit while paying customers receive higher ones. This adaptive approach keeps API access personalized and responsive while maintaining security and fairness.
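
A minimal sketch in Python of choosing a limit per caller. The plan names, numbers, the `Caller` type, and its `flagged` attribute are all hypothetical; the returned limit would feed whichever counting algorithm you use:

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical plans and per-minute limits, for illustration only.
PLAN_LIMITS = {"free": 60, "pro": 600, "enterprise": 6000}
ANONYMOUS_LIMIT = 10


@dataclass
class Caller:
    api_key: Optional[str]     # None for unauthenticated callers
    plan: Optional[str]        # plan looked up from the validated key
    flagged: bool = False      # set by your own usage-pattern analysis


def limit_for(caller: Caller) -> int:
    """Choose a per-minute limit from authentication, plan, and behavior."""
    if caller.api_key is None:
        base = ANONYMOUS_LIMIT
    else:
        # Unknown or missing plans fall back to the free tier.
        base = PLAN_LIMITS.get(caller.plan or "free", PLAN_LIMITS["free"])
    # Callers flagged for suspicious usage get half their normal allowance.
    return max(1, base // 2) if caller.flagged else base
```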

7. Per-Endpoint Rate Limiting: Tailoring Limits to Endpoint Requirements

Per-endpoint rate limiting lets you set a custom limit for each endpoint based on its cost and usage patterns. For example, a login endpoint or an expensive report-generation endpoint usually warrants a much tighter limit than a cheap, cacheable read. This granular approach allocates resources sensibly and prevents individual endpoints from being overwhelmed by excessive traffic.
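
A minimal sketch in Python of a per-endpoint limit table. The routes, numbers, and helper names are hypothetical, and a real router would match route templates (such as `/users/{id}`) rather than raw paths:

```python
from typing import Dict, Tuple

# Hypothetical per-endpoint limits (requests per minute per client).
ENDPOINT_LIMITS: Dict[Tuple[str, str], int] = {
    ("POST", "/login"): 5,        # brute-force target: keep it tight
    ("POST", "/reports"): 10,     # triggers heavy back-end work
    ("GET", "/products"): 300,    # cheap, cacheable reads
}
DEFAULT_LIMIT = 100               # applies to any endpoint not listed


def endpoint_limit(method: str, path: str) -> int:
    """Look up the limit for an endpoint, falling back to the default."""
    return ENDPOINT_LIMITS.get((method.upper(), path), DEFAULT_LIMIT)


def bucket_key(client_id: str, method: str, path: str) -> str:
    """Count each client separately per endpoint, so heavy use of one
    route does not consume the allowance for the others."""
    return f"{client_id}:{method.upper()}:{path}"
```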

8. Response Code Rate Limiting: Targeting Specific API Responses

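Rate limiting can also be driven by the response codes your API returns. A run of error responses to the same client, such as repeated 401 Unauthorized responses from failed logins or 404 Not Found responses from endpoint scanning, can trigger tighter limits or a temporary block for that client. Throttled requests are then answered with 429 Too Many Requests, ideally with a Retry-After header telling the client when to try again, which helps prevent abuse and keeps the service reliable.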
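
A minimal in-memory sketch in Python. It assumes the application calls `check()` before handling each request and `record()` with the final status code afterwards; the watched status codes, thresholds, and the `FailureThrottle` name are hypothetical policy choices. The 429 status code is defined in RFC 6585, which notes that the response may include a Retry-After header:

```python
import math
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Optional, Tuple

# Hypothetical policy values, for illustration only.
WATCHED_STATUSES = {401, 403, 404}   # failed auth, forbidden, probable scanning
MAX_FAILURES = 5                     # watched responses tolerated per window
WINDOW_SECONDS = 60.0
BLOCK_SECONDS = 300.0


class FailureThrottle:
    """Blocks a client temporarily after too many watched error responses."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.clock = clock
        self.failures: Dict[str, Deque[float]] = defaultdict(deque)
        self.blocked_until: Dict[str, float] = {}

    def check(self, client_id: str) -> Optional[Tuple[int, Dict[str, str]]]:
        """Before handling a request: None to proceed, or (429, headers)."""
        now = self.clock()
        until = self.blocked_until.get(client_id)
        if until is not None and now < until:
            # Retry-After in whole seconds, rounded up.
            return 429, {"Retry-After": str(math.ceil(until - now))}
        return None

    def record(self, client_id: str, status: int) -> None:
        """After handling a request: feed in the status code that was returned."""
        if status not in WATCHED_STATUSES:
            return
        now = self.clock()
        times = self.failures[client_id]
        times.append(now)
        # Forget failures that are older than the window.
        while times and times[0] <= now - WINDOW_SECONDS:
            times.popleft()
        if len(times) >= MAX_FAILURES:
            self.blocked_until[client_id] = now + BLOCK_SECONDS
            times.clear()
```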

9. Client Identification and Key-based Rate Limiting: Individualized Access Control

Assigning unique identifiers or API keys to clients allows for individual rate limits to be enforced per client. This approach ensures that each client’s usage is independently tracked and regulated, enhancing security and accountability.
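
A minimal sketch in Python of identifying clients and tracking each one separately. The `x-api-key` header name, the IP-address fallback, and the `UsageLedger` quota class are assumptions for illustration:

```python
from collections import defaultdict
from typing import Dict, Mapping

# Hypothetical header name; your API may use Authorization or another header.
API_KEY_HEADER = "x-api-key"


def client_id(headers: Mapping[str, str], remote_addr: str) -> str:
    """Identify the caller by API key if present, else by IP address.

    Assumes the key has already been validated; unvalidated keys would let
    an attacker mint fresh identities to escape their limit."""
    lowered = {name.lower(): value for name, value in headers.items()}
    key = lowered.get(API_KEY_HEADER)
    return f"key:{key}" if key else f"ip:{remote_addr}"


class UsageLedger:
    """Counts requests independently per client identifier against a quota."""

    def __init__(self, quota: int):
        self.quota = quota
        self.used: Dict[str, int] = defaultdict(int)

    def allow(self, cid: str) -> bool:
        if self.used[cid] >= self.quota:
            return False
        self.used[cid] += 1
        return True

    def reset(self) -> None:
        """Start a new quota period (e.g. from a scheduled job)."""
        self.used.clear()
```

Falling back to the IP address is a compromise: clients behind the same NAT gateway or proxy end up sharing one allowance.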

10. Adaptive Rate Limiting: Responding to Changing Conditions

Adaptive rate limiting adjusts limits based on current system load, network conditions, or other health signals such as CPU utilization or response latency. By continuously monitoring these signals, it tightens limits when the system is under stress and relaxes them as capacity returns, preventing overload or degradation while keeping the API responsive.
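
A minimal sketch in Python that uses 95th-percentile latency as the load signal. The signal, the 200 ms target, the 10% floor, and the function name are all hypothetical; the latency figure would come from your own metrics pipeline:

```python
def adaptive_limit(base_limit: int, p95_latency_ms: float,
                   target_ms: float = 200.0, floor_fraction: float = 0.1) -> int:
    """Scale a rate limit down as observed latency rises above a target.

    At or below the target, the full base limit applies. Above it, the limit
    shrinks in proportion to target / latency, but never below
    floor_fraction * base_limit (and never below 1)."""
    if p95_latency_ms <= target_ms:
        return base_limit
    factor = max(floor_fraction, target_ms / p95_latency_ms)
    return max(1, int(base_limit * factor))
```

With a base limit of 1,000 and a target of 200 ms, a p95 latency of 400 ms halves the limit to 500, and very high latency bottoms out at 100.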

Conclusion: Strengthening Your API Security Posture

With these 10 techniques at your disposal, you can confidently protect your REST API from abuse, ensure fair usage for all clients, and maintain the reliability and integrity of your services. At Valency Networks, we’re committed to helping you optimize your API security posture and achieve your business objectives. Contact us today to learn more about our comprehensive API security solutions.